Accessing web services with cURL

ChEMBL web services are really friendly. We provide live online documentation, plus support for CORS and JSONP to help web developers create their own web widgets. For Python developers, we provide a dedicated client library, as well as examples using the client and the well-known requests library in the form of an IPython notebook. There are also examples for Java and Perl; you can find them here.

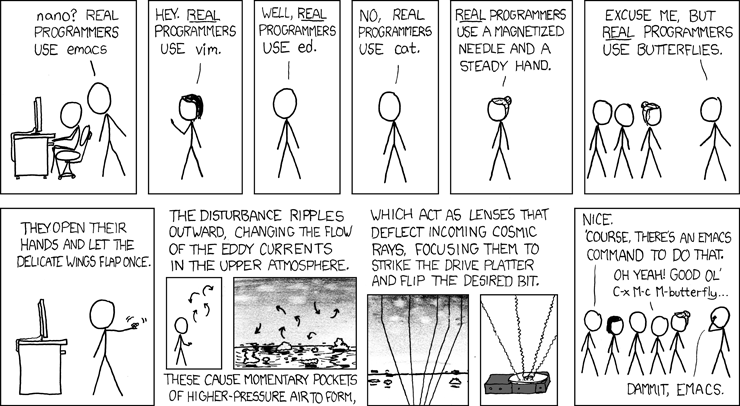

But this is nothing for real UNIX/Linux hackers. Real hackers use cURL, and there is a good reason to do so. cURL comes preinstalled on many Linux distributions as well as on OS X. It follows the Unix philosophy and can be combined with other tools using pipes. Finally, it can be used inside bash scripts, which is very useful for automating tasks.

Unfortunately, first experiences with cURL can be frustrating. For example, after studying the cURL manual pages, one may think that the following will return a set of compounds in JSON format:

But the result is quite disappointing...

The reason is that `--data-urlencode`, like the shorter `--data` (`-d`), tells our server (by setting the Content-Type header) that the request parameters are encoded as "application/x-www-form-urlencoded", the default Internet media type for form data. In this format, key-value pairs are separated from each other by an '&' character, and each key is separated from its value by an '=' character, for example `key1=value1&key2=value2`.

This is not the format we used. We provided our data in JSON format, so how do we tell the ChEMBL servers which format we are using? It turns out to be quite simple: we just need to specify a Content-Type header:
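As a minimal sketch of that fix, the function below sends a JSON body and overrides the default header with `-H`. The endpoint URL, the function name `post_json`, and the payload in the usage line are assumptions made for illustration; substitute the resource and parameters you actually query.

```shell
# Assumption: placeholder endpoint; replace it with the resource you are querying.
CHEMBL_URL="${CHEMBL_URL:-https://www.ebi.ac.uk/chembl/api/data/molecule}"

# Send the JSON document given as $1 as the request body.
post_json() {
    # -d supplies the body and makes curl issue a POST;
    # -H replaces the form-encoded Content-Type that -d would otherwise send.
    curl -s -H 'Content-Type: application/json' -d "$1" "$CHEMBL_URL"
}
```

It would be called as `post_json '{"key": "value"}'`. Dropping the `-H` option sends the same JSON text, but labelled as form data.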

If we would like to omit the header, the correct invocation would be:
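A sketch of the header-free variant, under the same assumptions as before (placeholder endpoint, hypothetical function name `post_form`, made-up parameter names in the usage line): the data is written as key-value pairs, which is exactly what `-d` announces by default.

```shell
# Assumption: placeholder endpoint; replace it with the resource you are querying.
CHEMBL_URL="${CHEMBL_URL:-https://www.ebi.ac.uk/chembl/api/data/molecule}"

# Send form-encoded parameters, e.g. 'key1=value1&key2=value2', given as $1.
post_form() {
    # No -H needed: -d already sends
    # Content-Type: application/x-www-form-urlencoded.
    curl -s -d "$1" "$CHEMBL_URL"
}
```

It would be called as `post_form 'key1=value1&key2=value2'`. For values containing reserved characters, `--data-urlencode 'key=value'` percent-encodes the value before sending it.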

OK, so request parameters can be encoded as key-value pairs (the default) or as JSON (header required). What about the result format? Currently, ChEMBL web services support JSON and XML output formats. How do we choose the format in which the results are returned? This can be done in three ways:

1. Default - if you don't do anything to indicate the desired output format, XML will be assumed. So this:

will produce XML.

2. Format extension - you can append a format extension (.xml or .json) to explicitly state your desired format:

will produce JSON.

3. `Accept` header - this header specifies the content types that are acceptable for the response, so:

will produce JSON.
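The three options above can be sketched as small shell functions. The base URL, the `molecule` resource path, and the function names are assumptions for illustration, so adjust them to the service you are calling.

```shell
# Assumption: base URL and resource path are illustrative.
BASE_URL="${BASE_URL:-https://www.ebi.ac.uk/chembl/api/data}"

# 1. No format hint: the server falls back to its default (XML, per the list above).
compound_default() {
    curl -s "${BASE_URL}/molecule/${1}"
}

# 2. Format extension appended to the resource.
compound_by_extension() {
    curl -s "${BASE_URL}/molecule/${1}.json"
}

# 3. Accept header asking for JSON.
compound_by_accept() {
    curl -s -H 'Accept: application/json' "${BASE_URL}/molecule/${1}"
}
```

Each function takes a compound identifier, for example `compound_by_accept CHEMBL1`.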

Enough boring stuff - Let's write a script!

Scripts can help us automate repetitive tasks. One example of such a task would be retrieving a batch of the first 100 compounds (CHEMBL1 to CHEMBL100). This is very easy to code in bash using curl (note the use of the -s parameter, which prevents curl from printing network and progress information):

Executing this script will return information about the first 100 compounds in JSON format. But if you carefully inspect the output, you will find that some compound identifiers don't exist in ChEMBL:
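A minimal sketch of such a script, assuming an illustrative base URL and resource path, and using the hypothetical function name `fetch_first_compounds`:

```shell
# Assumption: base URL and resource path are illustrative.
BASE_URL="${BASE_URL:-https://www.ebi.ac.uk/chembl/api/data}"

# Print the JSON description of CHEMBL1 .. CHEMBL100, one document per line.
fetch_first_compounds() {
    i=1
    while [ "$i" -le 100 ]; do
        # -s silences the progress meter, so only the response body is printed.
        curl -s "${BASE_URL}/molecule/CHEMBL${i}.json"
        echo    # newline, so that every document ends up on its own line
        i=$((i + 1))
    done
}
```

Saved as compounds.sh with a final line that calls `fetch_first_compounds`, it can be run with `sh compounds.sh`.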

We need to add some error handling, for example checking whether the HTTP status code returned by the server is 200 (OK). curl comes with the `--fail` (`-f`) option, which tells it to return a non-zero exit code when the server responds with an HTTP error (status 400 or above) instead of printing the error document. With this knowledge we can modify our script to add error handling.

OK, but the output still looks like a chaotic soup of strings and brackets, and is not very readable...
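For reference, the error handling based on `--fail` could look like the sketch below. The base URL, resource path, and function name are assumptions; the only curl behaviour relied on is that `-f` makes curl exit with a non-zero status on an HTTP error.

```shell
# Assumption: base URL and resource path are illustrative.
BASE_URL="${BASE_URL:-https://www.ebi.ac.uk/chembl/api/data}"

# Like the plain loop, but skip identifiers for which the request fails.
fetch_first_compounds_checked() {
    i=1
    while [ "$i" -le 100 ]; do
        # The assignment inherits curl's exit status, so 'if' sees the failure.
        if body=$(curl -s -f "${BASE_URL}/molecule/CHEMBL${i}.json"); then
            printf '%s\n' "$body"
        else
            # Report on stderr so the JSON stream on stdout stays clean.
            echo "CHEMBL${i}: request failed, skipping" >&2
        fi
        i=$((i + 1))
    done
}
```

Writing the warnings to stderr keeps them out of any pipe that consumes the JSON.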

Usually we would use a classic trick to pretty-print JSON - piping it through Python:

But it won't work in our case:

Why? The reason is that the Python trick can pretty-print only a single JSON document. What we get as output is a collection of JSON documents, each of which describes a different compound and is written on a separate line. Such a format is called Line Delimited JSON, and it is both well known and very useful.

Anyway, we are data scientists after all, so we know plenty of other tools that can help. In this case the most useful is jq - a "lightweight and flexible command-line JSON processor", a kind of sed for JSON.
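The difference is easy to reproduce without touching the network. The sketch below assumes `python3` is on the PATH; the two records are placeholders rather than real ChEMBL output, and the function names are made up for this example.

```shell
# Two placeholder documents, one per line (line-delimited JSON).
ndjson='{"chemblId": "CHEMBL1"}
{"chemblId": "CHEMBL2"}'

# Whole stream at once: json.tool expects exactly one document,
# so it exits with a non-zero status on this input.
pretty_print_stream() {
    python3 -m json.tool
}

# One document per invocation works, at the cost of one Python start-up per line.
pretty_print_lines() {
    while IFS= read -r line; do
        printf '%s\n' "$line" | python3 -m json.tool
    done
}
```

Piping the sample with `printf '%s\n' "$ndjson" | pretty_print_stream` fails, while the same input piped into `pretty_print_lines` prints both documents indented.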

With jq it's very easy to pretty print our script output:
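A minimal sketch, assuming jq is installed; the function name is hypothetical and simply wraps jq's identity filter.

```shell
# '.' is the identity filter: jq re-emits every document it reads from the
# stream, indented, so line-delimited input is handled document by document.
pretty_print() {
    jq '.'
}
```

With the script from earlier this becomes `sh compounds.sh | pretty_print`, or simply `sh compounds.sh | jq '.'`.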

Great, so we can finally see what the server has returned. Let's try to extract some data from our JSON collection, say chemblId and molecular weight:

Perfect. Can we have both properties printed on one line, separated by a tab? Yes, we can!

So now we can get a ranking of the first 100 compounds sorted by their weight:
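A sketch of both steps, assuming jq is installed. The field names `chemblId` and `molecularWeight` follow the wording of the text and are assumptions; check them against a real response. A filter such as `jq '.chemblId, .molecularWeight'` prints the two values on separate lines, while string interpolation puts them on one line:

```shell
# Assumption: the field names are illustrative; adapt them to the actual response.
id_and_weight() {
    # -r prints raw strings instead of JSON-quoted ones;
    # \t inside the jq string literal is a tab character.
    jq -r '"\(.chemblId)\t\(.molecularWeight)"'
}
```

It reads the JSON stream on standard input, for example `sh compounds.sh | id_and_weight`.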
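One way to sketch the ranking is to hand the tab-separated lines to `sort`. As before, the field names and the function name are assumptions, and jq is assumed to be installed.

```shell
# Assumption: the field names are illustrative; adapt them to the actual response.
rank_by_weight() {
    # Emit "id<TAB>weight" lines, then sort numerically on the second column.
    # LC_ALL=C makes sort treat '.' as the decimal point regardless of locale.
    jq -r '"\(.chemblId)\t\(.molecularWeight)"' |
        LC_ALL=C sort -k2,2n
}
```

This lists the lightest compound first; `sort -k2,2nr` would reverse the order.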

Exercises for readers:

1. Can you modify the compounds.sh script to accept a range of compounds (first argument is the start, second argument is the length)?

2. Can you modify the script to read compound identifiers from a file?

3. Can you add a 'structure' parameter that accepts a SMILES string? When this parameter is present, the script should return similar compounds (you can decide on the similarity cutoff or add an extra parameter).