Unpacking a GPU computation server...Leviathan unleashed

What / why?

As you might know, EMBL-EBI has a very powerful cluster. Yet some time ago we ran into some limitations and were pondering how great it would be if we could run more concurrent threads on a single machine, avoiding the bottleneck that inevitably appears on the network for some jobs.

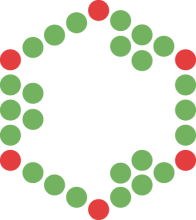

It turns out there is an answer, in the form of a GPU (graphics processing unit). This is the same type of chip that renders 3D graphics for games on your home PC or laptop. While the individual calculation cores of a GPU are relatively limited compared to those of a CPU, a GPU has a massive number of them, which lets it generate 3D environments at 60 frames per second. Schematically it looks like this (CPU left, GPU right):

As you can see, the CPU can handle 8 threads concurrently, whereas the GPU has 2880 cores working in parallel (see also this great YouTube video by the MythBusters). We have all kinds of ideas for calculations we want to run on the GPUs, which have already been shown to work well for molecular dynamics (MD), but first... the geek tradition that is unboxing!

Nvidia

The guys at Nvidia were very generous and provided us with 5 GPUs (thanks to Mark Berger and Timothy Lanfaer). Tim was also very quick to answer our technical questions about the required hardware specs and to help with software troubleshooting. Thanks again!

Unpacking

At EMBL-EBI, people typically work with laptops or thin clients, and the cluster consists of blades, so there was no place to put our GPUs. After a quick investigation, however, we had a list of the hardware we wanted, and a big box was delivered two weeks ago!

Time to unpack...

After opening the box and taking out the hardware, we had a tower / 4U rack-mountable chassis:

Next up, placement of the GPUs inside the chassis:

Some tinkering was in order:

And finally we could boot and install the OS. We chose Ubuntu 12.04 LTS for its stability and the availability of many packages (with source code).

Leviathan?

Just one question remains, why 'Leviathan'?

Given the availability of Python-based CUDA packages, we will probably start there. Hence our server will be a very powerful incarnation of Python, and what's more awe-inspiring than the Leviathan?

CUDA running

After some trouble getting the drivers to work on Ubuntu 12.04 LTS, Michal got everything up and running!

Potential projects

Some of the projects we will start with are CUDA-based random forests, similarity matrix calculations, and compound clustering. If you have a good idea and would like to collaborate and co-publish, please contact us via email!
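To illustrate why similarity matrices and compound clustering suit a GPU, here is a minimal CPU reference sketch in plain Python. It is not the code we will run on Leviathan. It assumes that compounds are represented as binary fingerprints packed into Python integers, uses the Tanimoto coefficient as the similarity measure, and swaps in Butina-style clustering as one common example of compound clustering. The function names `tanimoto`, `similarity_matrix` and `butina_clusters` are hypothetical.

```python
from itertools import combinations


def tanimoto(a: int, b: int) -> float:
    """Tanimoto similarity of two fingerprints stored as bit-packed integers."""
    union = bin(a | b).count("1")
    if union == 0:
        return 1.0  # assumed convention: two empty fingerprints count as identical
    return bin(a & b).count("1") / union


def similarity_matrix(fps: list[int]) -> list[list[float]]:
    """Symmetric N x N matrix; every (i, j) pair is computed independently."""
    n = len(fps)
    sim = [[1.0] * n for _ in range(n)]  # diagonal: each compound vs itself
    for i, j in combinations(range(n), 2):
        s = tanimoto(fps[i], fps[j])
        sim[i][j] = sim[j][i] = s
    return sim


def butina_clusters(sim: list[list[float]], threshold: float = 0.7) -> list[list[int]]:
    """Butina-style clustering on a precomputed similarity matrix."""
    n = len(sim)
    # neighbours[i]: all compounds (including i itself) at or above the threshold
    neighbours = [{j for j in range(n) if sim[i][j] >= threshold} for i in range(n)]
    # Candidate centroids with the most neighbours are processed first
    order = sorted(range(n), key=lambda i: len(neighbours[i]), reverse=True)
    unassigned = set(range(n))
    clusters = []
    for i in order:
        if i not in unassigned:
            continue  # already a member of an earlier cluster
        members = neighbours[i] & unassigned
        clusters.append(sorted(members))
        unassigned -= members
    return clusters


if __name__ == "__main__":
    fingerprints = [0b1111, 0b1110, 0b0001, 0b110000, 0b110001]
    sim = similarity_matrix(fingerprints)
    print(butina_clusters(sim, threshold=0.6))
```

The matrix needs N(N-1)/2 pair comparisons, and none of them depends on another. That independence is what would let a CUDA version assign one GPU thread per (i, j) pair instead of looping over pairs one at a time as this sketch does.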

Specs

The server contains the following hardware:

Case: Supermicro GPU tower/4U server

PSU: 1,620 W redundant PSU

CPUs: 2 × Intel Xeon E5-2603, 1.8 GHz, 4 cores each

RAM: 8 × 8 GB registered ECC DDR3, 1600 MHz (64 GB total)

Disk: 1 × 2 TB 3.5” SATA HDD

GPUs: 1 × Tesla K40; 2 × Tesla K20 (one extra to be added later)

Michal & Gerard